The aim of supervised machine learning is to automatically learn relationships between variables, and to use these learned relationships to make predictions on new data.

To do so, we could use all the data we already have to build and train our model, and then go out in the world and gather new data to test if our model makes good predictions.

However, even though this strategy may look straightforward, it has (at least!) two major flaws. First, it requires a lot of time and effort to gather new data out in the wild each time we want to try a new model. Second, our algorithm would hopefully be pretty good at modeling the data we have, but it would almost certainly return much worse results on new, previously unseen data (this is called overfitting, see below).

Divide et Impera: Training Set & Test Set

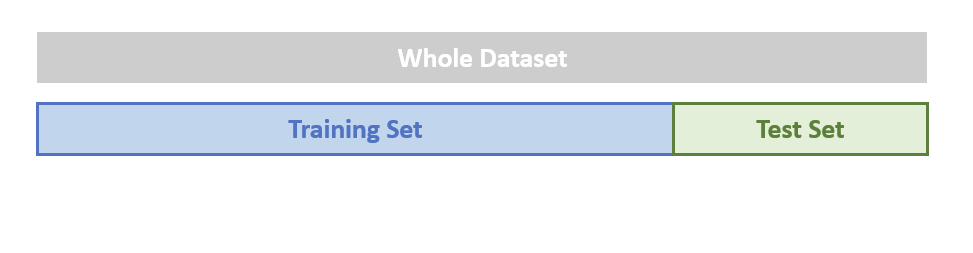

That is why one of the basic procedures in machine learning consists in splitting the data we have into two separate datasets, the training set and the test set.

The Training Set is used to train the model, and the Test Set is then used to evaluate the model's performance on observations never seen by the learning algorithm. This lets us detect overfitting, i.e. the tendency of models to “remember” the specific observations they saw during training instead of capturing the deeper relationships between variables, which are crucial for generalizing to new data.

Normally, the training set is obtained by randomly sampling ~80% of all observations in our original dataset, leaving the remaining ~20% for the test set.

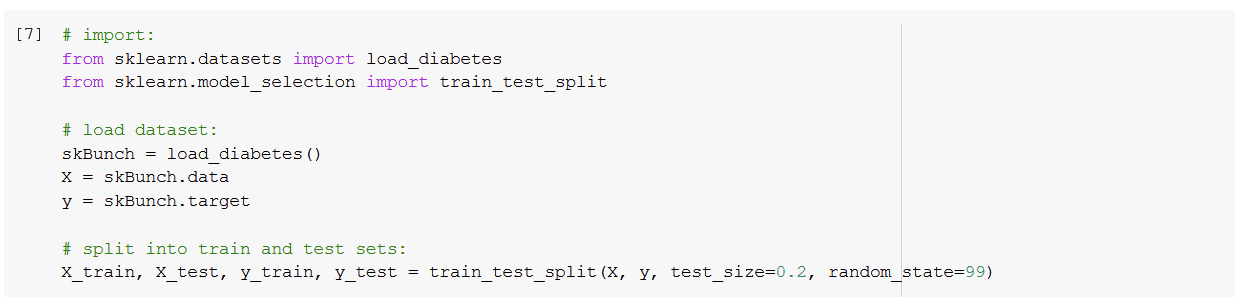

In sklearn, splitting the data into training and test sets is as easy as importing the train_test_split function and applying it to our original dataset (here we use sklearn's Diabetes toy dataset as an example):

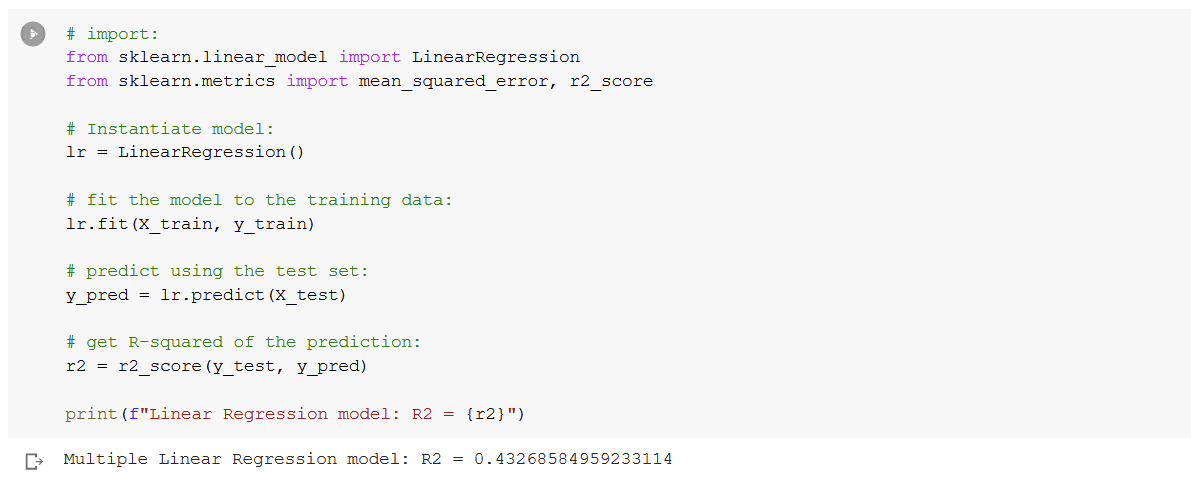
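As a minimal sketch of the split described above (the 80/20 proportion follows the text, while the seed value 42 and the variable names are illustrative assumptions, not necessarily those used in the original example):

```python
from sklearn.datasets import load_diabetes
from sklearn.model_selection import train_test_split

# Load the Diabetes toy dataset as a feature matrix X and a target vector y
X, y = load_diabetes(return_X_y=True)

# Randomly assign ~80% of the observations to the training set and ~20% to the test set.
# random_state fixes the seed, so the same split is reproduced on every run.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42
)

print("Training set:", X_train.shape)
print("Test set:", X_test.shape)
```

No observation ends up in both sets, which is what allows the test set to act as "unseen" data.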

After that, we will train our model (a Linear Regression in this case) on the train split, and test it by predicting using the test set:

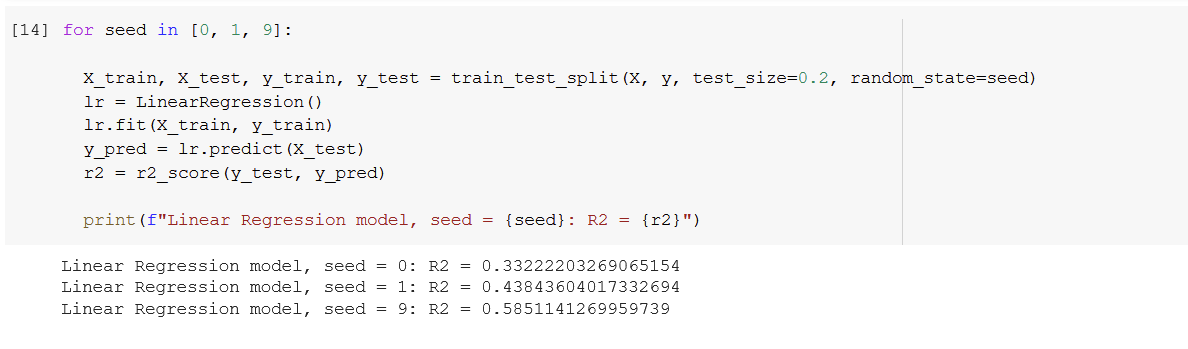
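The train-then-test step could look roughly like the sketch below. The choice of R² as the evaluation metric and the seed value are assumptions made for illustration; any regression metric from sklearn.metrics could be used instead.

```python
from sklearn.datasets import load_diabetes
from sklearn.linear_model import LinearRegression
from sklearn.metrics import r2_score
from sklearn.model_selection import train_test_split

X, y = load_diabetes(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42
)

# Fit the model using the training split only
model = LinearRegression()
model.fit(X_train, y_train)

# Predict on observations the model has never seen
y_pred = model.predict(X_test)

# Compare predictions with the true targets of the test set
test_r2 = r2_score(y_test, y_pred)
print(f"R^2 on the test set: {test_r2:.3f}")
```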

But what would happen if we used a different subset of our data to train our model? Would we get a different performance? We can quickly check this by passing a different seed to the train_test_split function (the argument to change is random_state).
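One way to run this check is to repeat the split with a few different seeds and compare the resulting scores. This is only a sketch: the seeds 0 to 4 and the use of R² (what LinearRegression.score returns) are assumptions chosen for illustration.

```python
from sklearn.datasets import load_diabetes
from sklearn.linear_model import LinearRegression
from sklearn.model_selection import train_test_split

X, y = load_diabetes(return_X_y=True)

scores_by_seed = {}
for seed in range(5):
    # A different random_state selects different observations for each set
    X_train, X_test, y_train, y_test = train_test_split(
        X, y, test_size=0.2, random_state=seed
    )
    model = LinearRegression().fit(X_train, y_train)
    # For regressors, score() returns R^2 on the given data
    scores_by_seed[seed] = model.score(X_test, y_test)

for seed, score in scores_by_seed.items():
    print(f"random_state={seed}: R^2 = {score:.3f}")
```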

As we can see, we get very different performances depending on the particular train-test split, i.e. depending on the particular observations that are included in or excluded from the two sets. How can we avoid this issue? By using Cross-Validation!

Cross-Validation

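Cross-Validation is basically a resampling method: instead of relying on a single train-test split, we split the same dataset several times and evaluate the model on each split.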

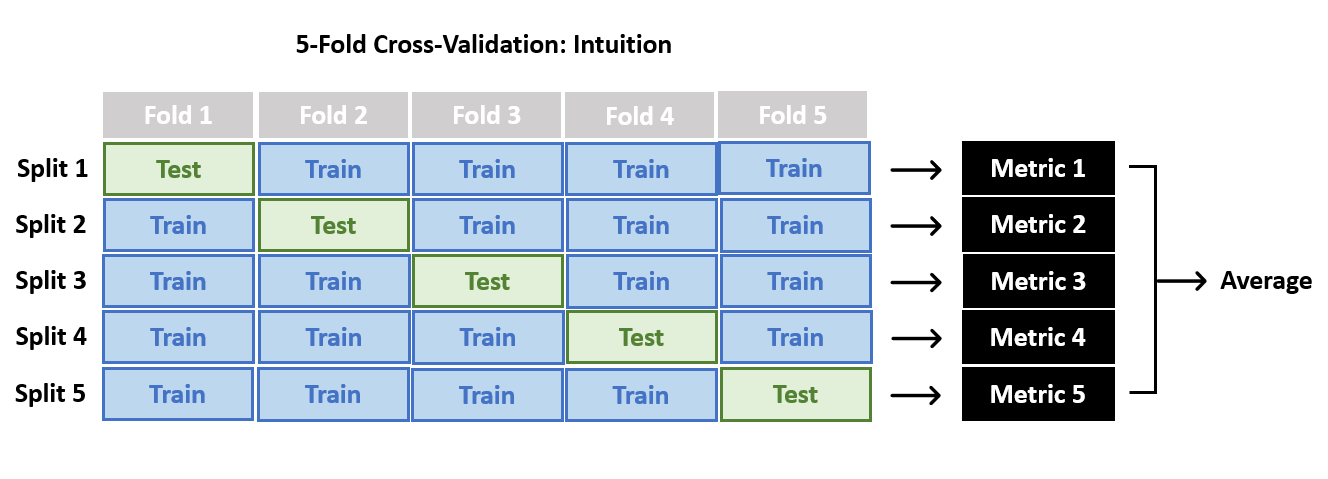

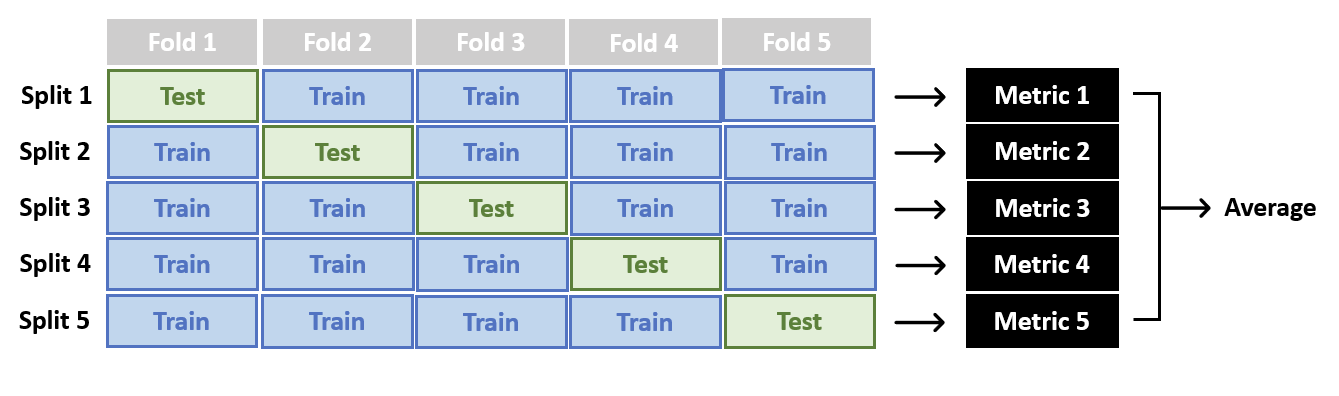

In k-Fold Cross-Validation, we divide the dataset into k "folds", i.e. subsets, each of which has (approximately) the same number of observations. We then use k-1 folds to train our model, and the remaining one to test it. We repeat this procedure k times, each time using a different fold as the test set. At the end, we average the performance metrics obtained for the k folds. Standard values for k are typically 5 or 10. When k equals the number of observations in the dataset, so that each fold contains a single observation, the technique is known as Leave-One-Out Cross-Validation.

By doing this, we greatly reduce the chances of being misled by an overfitted model, and we also reduce the variance of our performance estimate, i.e. the fact that the performance metrics we get may change (even wildly) because of the particular set of observations that we are using to train and test the model.
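To make the procedure concrete, the loop over folds can be written out by hand with sklearn's KFold splitter. This is a sketch under assumptions: k=5, shuffling with seed 0, and R² as the metric are illustrative choices.

```python
import numpy as np
from sklearn.datasets import load_diabetes
from sklearn.linear_model import LinearRegression
from sklearn.model_selection import KFold

X, y = load_diabetes(return_X_y=True)

# Split the row indices into k=5 folds (shuffled once, with a fixed seed)
kf = KFold(n_splits=5, shuffle=True, random_state=0)

fold_scores = []
tested_indices = []
for train_idx, test_idx in kf.split(X):
    # Train on k-1 folds, test on the fold that was held out
    model = LinearRegression().fit(X[train_idx], y[train_idx])
    fold_scores.append(model.score(X[test_idx], y[test_idx]))  # R^2
    tested_indices.extend(test_idx)

# Average the k per-fold metrics into a single performance estimate
mean_score = np.mean(fold_scores)
print("Per-fold R^2:", np.round(fold_scores, 3))
print(f"Mean R^2: {mean_score:.3f}")
```

Each observation appears in the test fold exactly once, so every data point contributes to the final estimate.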

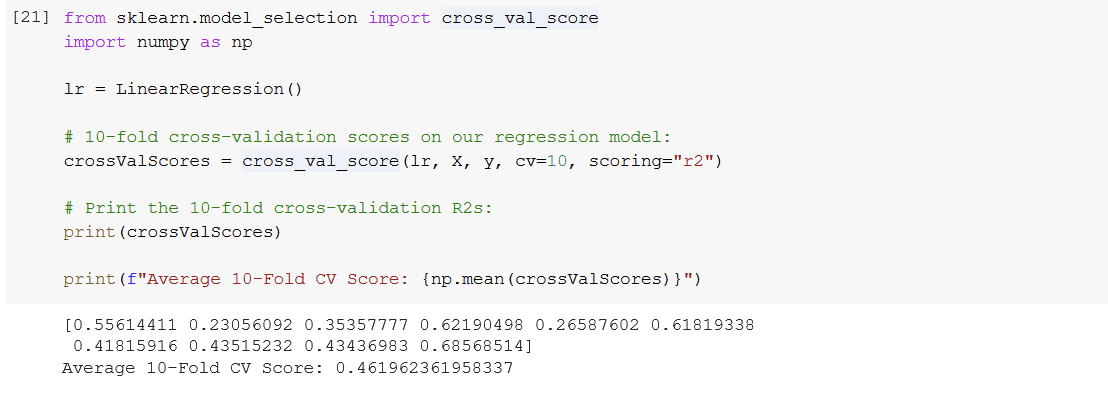

In sklearn we can perform k-fold CV as:
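A minimal sketch using cross_val_score is shown here; k=5 and R² scoring are assumptions chosen for illustration. Note that passing an integer as cv splits the data into consecutive folds without shuffling; a KFold object with shuffle=True can be passed instead if shuffling is wanted.

```python
from sklearn.datasets import load_diabetes
from sklearn.linear_model import LinearRegression
from sklearn.model_selection import cross_val_score

X, y = load_diabetes(return_X_y=True)

# Fit and evaluate the model on 5 different train/test splits;
# one score is returned per fold
cv_scores = cross_val_score(LinearRegression(), X, y, cv=5, scoring="r2")

print("Per-fold R^2:", cv_scores.round(3))
print(f"Mean R^2: {cv_scores.mean():.3f} (std: {cv_scores.std():.3f})")
```

Reporting the standard deviation alongside the mean gives a sense of how much the score varies from fold to fold.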

More on Cross-Validation